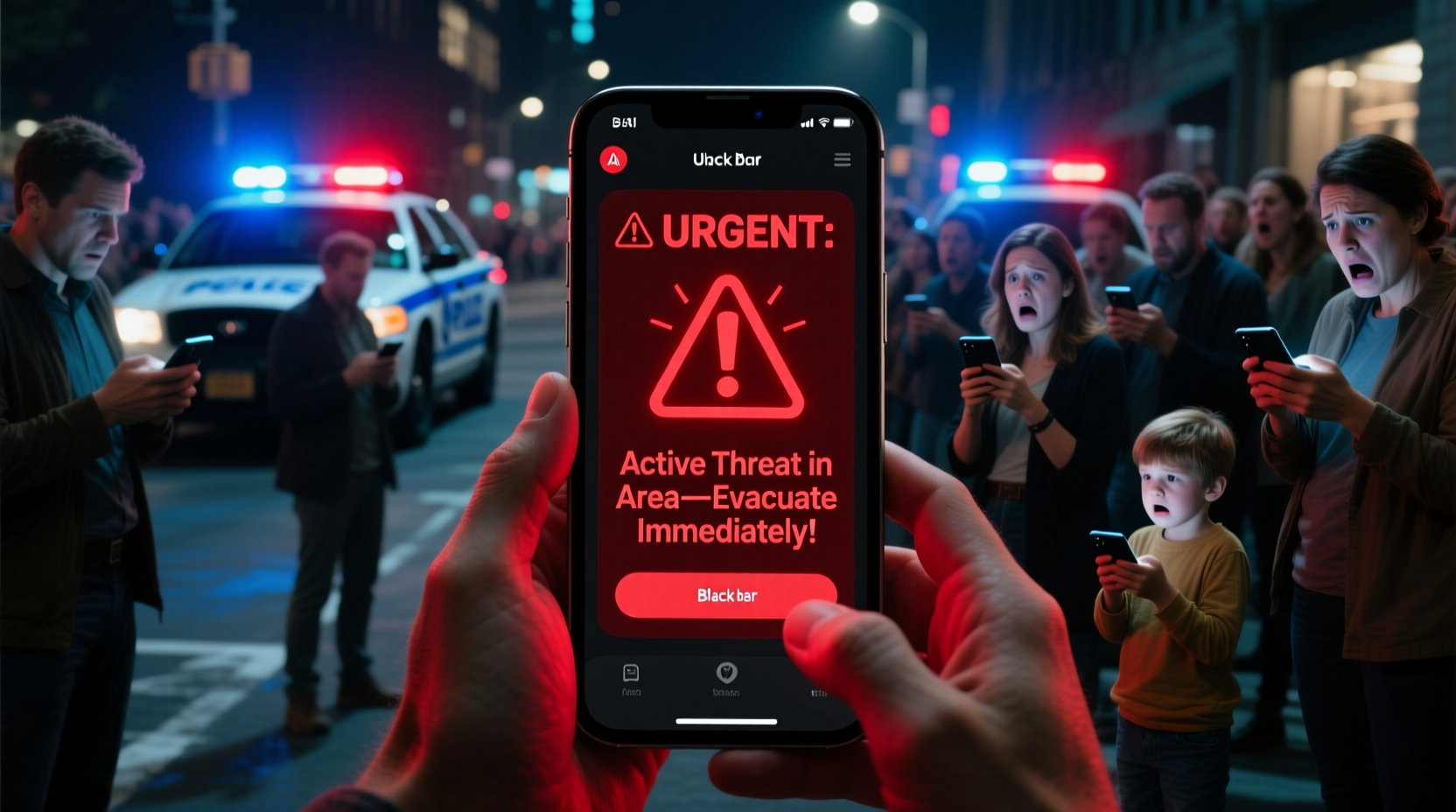

CrimeRadar, an AI-powered mobile app that converts publicly available U.S. police radio traffic into real-time alerts, has reportedly apologized after false crime notifications caused alarm in several American communities.

Key Points

- CrimeRadar misinterpreted police radio traffic, issuing false crime alerts that caused community panic.

- The app reportedly has over 2 million users nationwide, with as many as 700,000 downloads last month.

- Updates to audio processing and user-submitted corrections aim to improve accuracy and prevent future errors.

“We have been made aware of some serious transcription issues, which have resulted in the dissemination of inaccurate information,” CrimeRadar said in a statement to BBC Verify. “We understand the impact that a false report can have on the community. We apologize for any distress or disruption this caused,” it added.

A BBC Verify investigation reported that in December, CrimeRadar users in Bend, Oregon, were sent an alert suggesting a police vehicle had triggered a “man down” alarm, a signal typically associated with a seriously injured or shot officer. The notification prompted residents to seek confirmation on social media and through local channels, where they later learned that no shooting or officer injury had occurred.

CrimeRadar uses artificial intelligence to scan publicly accessible police radio transmissions across the United States, converting live audio into written transcripts that are then analyzed to generate crime alerts for users. The app is designed to provide near-real-time updates on local law enforcement activity so that users can stay informed about incidents in their communities. However, its reliance on automated interpretation has raised concerns about accuracy and missing context. CrimeRadar is reported to have over 2 million users nationwide, with as many as 700,000 downloads recorded just last month.


BBC Verify reported that the incident in Bend stemmed from an error in the app’s automated transcription system, which misinterpreted police radio traffic mentioning a “man down alarm” and rendered “Shop With the Cop” as “shot with the cop,” leading CrimeRadar to issue an inaccurate alert. According to authorities, the alarm had in fact been activated accidentally by an officer attending a “Shop With the Cop” charity event, and no violent incident had taken place.

“This is everyone’s worst nightmare,” Bend Police Communications Manager Sheila Miller told BBC Verify. Miller noted that CrimeRadar has on multiple occasions inaccurately reported “calls for service” within communities. She added that such false alerts can create unnecessary fear, leaving residents with the impression that their neighborhoods are less safe than they actually are.

BBC Verify said it had found evidence of the app repeatedly sending out “misleading, inaccurate, or false crime alerts.”


CrimeRadar also said it has upgraded its audio processing protocols to reduce transcription errors and will introduce features allowing agencies and community members to submit corrections and provide additional context for alerts.

The CrimeRadar incident spotlights the delicate balance between technological innovation and public responsibility. As AI tools increasingly influence daily life, developers must ensure accuracy, implement safeguards, and maintain oversight to prevent unintended harm. Striking this balance is essential for building trust, protecting communities, and demonstrating that cutting-edge technology can serve the public without compromising safety or reliability.